DigitalOcean Kubernetes Challenge

I’ve recently been doing DigitalOcean’s Kubernetes Challenge, part of which is creating a writeup for the project. So here it is!

DO gave a variety of options for projects in this challenge; I chose to deploy a scalable message queue. The brief was as follows:

A critical component of all the scalable architectures are message queues used to store and distribute messages to multiple parties and introduce buffering. Kafka is widely used in this space and there are multiple operators like Strimzi or to deploy it. For this project, use a sample app to demonstrate how your message queue works.

I’ve been interested in learning a bit more about message queues for a while, especially RabbitMQ (which I decided to use over Kafka), and thought this would be a good opportunity to do so. It also looked like a great chance to learn and make use of Pulumi. You can see the code for the project on my GitHub here.

- Creating a DigitalOcean Kubernetes Cluster with Pulumi

- Setting Up the RabbitMQ Operator

- Creating a Sample App

- Going Forward

Creating a DigitalOcean Kubernetes Cluster with Pulumi

Pulumi describes itself in its docs as:

A modern infrastructure as code platform that allows you to use familiar programming languages and tools to build, deploy, and manage cloud infrastructure.

I’ve previously used Terraform at work, and Pulumi serves a similar purpose. Rather than using HashiCorp Configuration Language, though, Pulumi lets you use a variety of general-purpose languages (Node.js, Python, .NET, and Go), so you can avoid learning a new domain-specific language when setting up Infrastructure as Code. This is especially useful for matching the language used in a larger project; for example, this project is written in Go, and Pulumi lets me write my infrastructure in Go too.

Pulumi features a registry, allowing various cloud providers to provide packages that can be used to provision infrastructure. Obviously for this challenge I’ll be using DigitalOcean Kubernetes, which is easily done as DO has their own package on the Pulumi registry. My use case is pretty straightforward - I just need a Kubernetes cluster - and the API docs in the registry are helpful, so I can pretty quickly get something like this:

package main

import (
	"github.com/pulumi/pulumi-digitalocean/sdk/v4/go/digitalocean"
	"github.com/pulumi/pulumi/sdk/v3/go/pulumi"
)

func main() {
	pulumi.Run(func(ctx *pulumi.Context) error {
		// As you could probably guess, NewKubernetesCluster is the function to create a new cluster,
		// passing my context, the name for the cluster, and a config struct.
		cluster, err := digitalocean.NewKubernetesCluster(ctx, "roo-k8s", &digitalocean.KubernetesClusterArgs{
			// Another config struct for the nodepool, where I set the name, node size, and node count.
			NodePool: digitalocean.KubernetesClusterNodePoolArgs{
				Name:      pulumi.String("roo-k8s-pool"),
				Size:      pulumi.String("s-2vcpu-4gb"),
				NodeCount: pulumi.Int(3),
			},
			// ... and also set the region and Kubernetes version!
			Region:  pulumi.String("lon1"),
			Version: pulumi.String("1.21.5-do.0"),
		})
		if err != nil {
			return err
		}

		// We export the Kubeconfig for the cluster, letting us use it later.
		ctx.Export("kubeconfig", cluster.KubeConfigs.Index(pulumi.Int(0)).RawConfig())
		return nil
	})
}

We could customize further, or build out more infrastructure, but this gives us a quick and easy Kubernetes cluster to work with. However, for this to actually provision anything, we need to provide credentials for DO. We can set the DIGITALOCEAN_TOKEN environment variable which will be picked up and used, but Pulumi also lets us store secrets as part of the stack:

$ pulumi config set digitalocean:token XXXXXXXXXXXXXX --secret

This allows anyone with access to the stack to make use of the secret - useful if multiple people are working on a project, or if you’re working across multiple machines.

Now that we’ve set this up, we can simply call pulumi up and get a preview of the changes to be made:

     Type                                     Name                     Plan
 +   pulumi:pulumi:Stack                      do-k8s-challenge-do-k8s  create
 +   └─ digitalocean:index:KubernetesCluster  roo-k8s                  create

Resources:
    + 2 to create

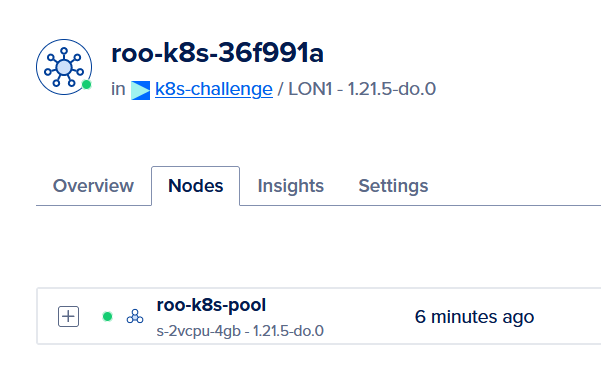

Double check that the plan is what we want (in this case, just create a Pulumi stack, and provision a DO Kubernetes cluster), and accept! Give it a few minutes, and we have a Kubernetes cluster ready to go! For projects like this, where I’m constantly tweaking and re-provisioning infrastructure, the speed at which DO provisions is super useful. To be safe, we can double check that we can see the cluster in the DO UI:

Now we can use pulumi stack output kubeconfig to output our kubeconfig, and use that to connect to our cluster!

And when we’re finished with the cluster, we can easily call pulumi destroy to bring down all the infrastructure we’ve provisioned - super useful for keeping costs down when developing, and making sure we haven’t accidentally created anything and left it running.

Setting Up the RabbitMQ Operator

RabbitMQ provides an Operator for use in Kubernetes - this means we can get RabbitMQ up and running quickly with a few commands, covered in their tutorial. We kubectl apply the operator YAML to create the operator and the required CRDs:

$ kubectl apply -f "https://github.com/rabbitmq/cluster-operator/releases/latest/download/cluster-operator.yml"
namespace/rabbitmq-system created
customresourcedefinition.apiextensions.k8s.io/rabbitmqclusters.rabbitmq.com created
serviceaccount/rabbitmq-cluster-operator created
role.rbac.authorization.k8s.io/rabbitmq-cluster-leader-election-role created
clusterrole.rbac.authorization.k8s.io/rabbitmq-cluster-operator-role created
rolebinding.rbac.authorization.k8s.io/rabbitmq-cluster-leader-election-rolebinding created
clusterrolebinding.rbac.authorization.k8s.io/rabbitmq-cluster-operator-rolebinding created
deployment.apps/rabbitmq-cluster-operator created

Then we can configure and create a RabbitMQ cluster ourselves in another YAML file:

apiVersion: rabbitmq.com/v1beta1
kind: RabbitmqCluster
metadata:
  name: roo-test
spec:
  # Use the latest image, with an alpine base, for smaller images - quicker deploys!
  image: rabbitmq:3.9.11-management-alpine
  # 3 replicas - one for each node - helps ensure availability.
  replicas: 3
  # Resource constraints so that the cluster is quick without consuming all the resources!
  resources:
    limits:
      cpu: 1000m
      memory: 1200Mi

Another kubectl apply and we’ve created a RabbitMQ cluster! We can quickly check everything is working properly:

$ kubectl get pods
NAME                READY   STATUS    RESTARTS   AGE
roo-test-server-0   1/1     Running   0          56s
roo-test-server-1   1/1     Running   0          56s
roo-test-server-2   1/1     Running   0          56s

Perfect! Additionally, there’s a perf-test image available to check the cluster is functional. I made a simple bash script to grab the username, password, and service for the cluster, pass those parameters to perf-test, and output the logs when ready:

$ ./perf-test.sh
pod/perf-test created
pod/perf-test condition met
id: test-192228-278, time: 1.000s, sent: 3244 msg/s, received: 3294 msg/s, min/median/75th/95th/99th consumer latency: 18511/318671/373425/411837/434261 µs
id: test-192228-278, time: 2.000s, sent: 9034 msg/s, received: 10894 msg/s, min/median/75th/95th/99th consumer latency: 433388/761260/813535/916186/962179 µs
id: test-192228-278, time: 3.000s, sent: 4539 msg/s, received: 19396 msg/s, min/median/75th/95th/99th consumer latency: 635194/956662/1053517/1480561/1801464 µs

We can see our RabbitMQ cluster is functional, and is properly handling messages for the test pod. However, I’d like to delve a bit deeper into RabbitMQ to further my understanding, so instead of using perf-test, I’m going to create my own senders and receivers to work with.

Creating a Sample App

RabbitMQ provides some tutorials, including for Go, which I’m going to follow to build a sample app while learning about RabbitMQ. I’m not going to repeat everything covered in the tutorials, so we’ll skip over that, but I have made a few crucial changes to the code they give: the sender program loops 10 times (instead of sending just one message), and both programs construct the connection string (username, password, and IP) from environment variables rather than hard-coding it.

Now I have two fairly straightforward Go programs; one that is generating 10 random numbers and publishing them to a queue, and another that is reading from the queue. In order for this to run in Kubernetes, we need to containerize the binaries, which is easily done with docker build and a Dockerfile:

FROM golang:1.17-alpine
WORKDIR /app
COPY go.mod ./
COPY go.sum ./
RUN go mod download
COPY send.go ./
RUN go build send.go
CMD ["./send"]

This Dockerfile is for the ‘send’ image, and I do the exact same thing for the receive one (but pointing to the other file). Once they’re built, I can tag and push them to a container registry; in this case Docker Hub:

$ docker tag roothorp/sender roothorp/sender:0.1
$ docker push roothorp/sender:0.1

Now our images are available to the world at large, including our Kubernetes cluster. Adding to the YAML file we created the RabbitMQ cluster with earlier, we can create a deployment for the receiver:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: receiver
  labels:
    app: roo-test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: receiver
  template:
    metadata:
      labels:
        app: receiver
    spec:
      containers:
        - name: receive
          image: roothorp/receiver:0.1
          resources:
            limits:
              cpu: 200m
              memory: 200Mi
          env:
            - name: RABBITMQ_USERNAME
              valueFrom:
                secretKeyRef:
                  name: roo-test-default-user
                  key: username
            - name: RABBITMQ_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: roo-test-default-user
                  key: password

A deployment lets us declaratively state how we want our pods, and ensures that state is maintained. In this example we just want a single receiver, but if that pod were to crash, the deployment would restart the pod and ensure it stays up. If we wanted to scale, we could simply increase the replicas and re-apply the YAML, and Kubernetes would spin up more pods for us.

Additionally, we’re setting environment variables for the pod here from a secret. Earlier I said that I’d modified the sender and receiver examples to build their connection string from environment variables, and this is why. The RabbitMQ operator sets up a default-user secret containing (amongst other things) the username and password to access the queue, and Kubernetes lets us easily and safely access them here, passing them down to our program. For the service IP, Kubernetes sets environment variables for the hosts and ports of services available on the cluster, so we can get the IP for the RabbitMQ service from the ROO_TEST_SERVICE_HOST variable (roo-test being the cluster’s name, upper-cased with the dash replaced by an underscore). Connecting to services (as well as doing so via DNS) is covered in more detail in the Kubernetes docs.

For our sender we can do something similar, but this time using a Job instead of a Deployment. Unlike a deployment (which would continually restart the pod), Jobs watch for the pod to complete (exit gracefully), and allow us to set a target number of completions, as well as a degree of parallelism. Once enough pods have completed, the job itself is completed. We’ll add this to our YAML:

apiVersion: batch/v1
kind: Job
metadata:
  name: sender
  labels:
    app: roo-test
spec:
  completions: 10
  parallelism: 2
  template:
    metadata:
      labels:
        app: sender
    spec:
      restartPolicy: Never
      containers:
        - name: send
          image: roothorp/sender:0.2
          resources:
            limits:
              cpu: 200m
              memory: 200Mi
          env:
            - name: RABBITMQ_USERNAME
              valueFrom:
                secretKeyRef:
                  name: roo-test-default-user
                  key: username
            - name: RABBITMQ_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: roo-test-default-user
                  key: password

As you can see, configuration is much the same as for the deployment, but we specify completions and parallelism too. In this case, each run of the sender sends 10 messages, so 10 completions means 100 messages in total, and a parallelism of 2 means that at most 2 sender pods will be running at any given time.
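If it helps to see those two settings in code, the sketch below mimics the Job's bookkeeping with goroutines: a buffered channel caps how many "pods" run at once, and a counter tallies the messages. This is only an analogy under stated assumptions, not how Kubernetes implements Jobs, and `runJob` is a hypothetical name.

```go
package main

import (
	"fmt"
	"sync"
)

// runJob runs `completions` workers, at most `parallelism` at a time, each
// "sending" messagesPerRun messages. It returns the total number of messages
// and the peak number of workers observed running at once.
func runJob(completions, parallelism, messagesPerRun int) (total, peak int) {
	var (
		mu      sync.Mutex
		wg      sync.WaitGroup
		running int
	)
	slots := make(chan struct{}, parallelism) // buffered channel used as a semaphore

	for i := 0; i < completions; i++ {
		wg.Add(1)
		slots <- struct{}{} // blocks while `parallelism` workers are active
		go func() {
			defer wg.Done()
			defer func() { <-slots }() // free the slot when this worker completes

			mu.Lock()
			running++
			if running > peak {
				peak = running
			}
			mu.Unlock()

			// A real sender pod would publish to the queue here; we only count.
			mu.Lock()
			total += messagesPerRun
			running--
			mu.Unlock()
		}()
	}
	wg.Wait()
	return total, peak
}

func main() {
	total, peak := runJob(10, 2, 10)
	fmt.Println("messages sent:", total)
	fmt.Println("peak concurrent senders:", peak)
}
```

With the values from the Job above, the total works out to 10 × 10 = 100 messages, and the peak never exceeds 2.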

We can again kubectl apply, and see our pods appear:

$ kubectl get pods
NAME                        READY   STATUS      RESTARTS   AGE
receiver-6b8874bc68-qgxvz   1/1     Running     0          83m
roo-test-server-0           1/1     Running     1          9m26s
roo-test-server-1           1/1     Running     1          10m
roo-test-server-2           1/1     Running     0          102m
sender-58xm8                0/1     Completed   0          10m
sender-5qqm4                0/1     Completed   0          9m58s
sender-dh46g                0/1     Completed   0          9m55s
sender-fn2gf                0/1     Completed   0          9m51s
sender-g8hb6                0/1     Completed   0          9m52s
sender-kftll                0/1     Completed   0          9m57s
sender-kt46f                0/1     Completed   0          9m56s
sender-lzxzh                0/1     Completed   0          10m
sender-pq86c                0/1     Completed   0          9m54s
sender-qbv8z                0/1     Completed   0          9m53s

Our 10 completed senders from our job, our 3 RabbitMQ pods, and a single receiver pod. We can check the receiver pod’s logs and see the messages arriving (I’ve trimmed them, lest this code block be longer than the rest of the blog post):

$ kubectl logs receiver-6b8874bc68-qgxvz
2021/12/31 03:11:51 Received a message: 81
2021/12/31 03:11:51 Received a message: 887
2021/12/31 03:11:51 Received a message: 847
2021/12/31 03:11:51 Received a message: 59
2021/12/31 03:11:51 Received a message: 81
2021/12/31 03:11:51 Received a message: 318
2021/12/31 03:11:51 Received a message: 425
2021/12/31 03:11:51 Received a message: 540
2021/12/31 03:11:51 Received a message: 456
2021/12/31 03:11:51 Received a message: 300

And there we have our basic Message Queue!

Going Forward

This wraps up a pretty basic RabbitMQ setup and test on DigitalOcean’s Kubernetes offering. There are a handful of things that I’d like to add to this project in the future, which I thought I should quickly cover here (for my sake as much as anyone else’s):

Improving the Docker images, primarily their size, is pretty straightforward and would make things move quicker. Currently the images are about 300MB, which is incredibly big for a simple program like the one I’m using here. Utilizing multi-stage builds to build in the larger golang base images before moving to a slimmer image (such as scratch) can drastically reduce the size of images, as well as whittle down the build time thanks to caching.

Furthering my implementation (and understanding) of RabbitMQ. While I got a nice understanding of the basics of RabbitMQ, delving deeper into this project and building out a more comprehensive application that makes use of RabbitMQ (rather than providing an example input and output) sounds interesting and like a natural next step. Finishing the provided tutorials and moving on to creating a larger app would be ideal, and a good opportunity to get to grips with other technologies I’m interested in. This would include writing tests for the apps; I neglected to do this for these straightforward examples, but writing tests is a great habit to get into as I go.

Finally, making use of GitHub Actions to automate much of the deployment would be a great thing to learn (and would cut out a lot of repetitive building, pushing, and applying). Having a workflow that tests and lints, builds images, brings up or modifies infra, and applies Kubernetes configuration would be perfect!

Hopefully this provided some insight into how I approached this challenge, and perhaps taught somebody something. At the very least, I hope it was an interesting read - if you have any questions or thoughts, feel free to contact me - my details are available on the front page of this site. Thank you, and happy new year!

2021-12-31 00:00